Scrape Center Scraping Practice: the ssr3 Case

The ssr3 case is protected by HTTP Basic Authentication, which makes it a good exercise in HTTP authentication. The username and password are both admin.
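For reference, HTTP Basic Authentication (RFC 7617) works by sending an Authorization header whose value is Basic followed by the Base64 encoding of username:password. Below is a minimal sketch using only the standard library. basic_auth_header is a hypothetical helper name and is not part of any library:

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of an HTTP Basic Authorization header (hypothetical helper)."""
    # Basic auth joins the credentials with a colon, then Base64-encodes the bytes
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    # The ssr3 credentials are admin / admin
    print(basic_auth_header("admin", "admin"))  # Basic YWRtaW46YWRtaW4=
```

Base64 is an encoding, not encryption. Anyone who can read the request can recover the password, so Basic auth is only safe over HTTPS.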

ssr series

Crawl targets

- Detail page link

- Movie name

- Score

- Plot synopsis

Local storage

- CSV file
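Here is a minimal sketch of the CSV step. It assumes each movie is held as a dict containing the four crawl targets above. write_movies and the column names url/name/score/drama are hypothetical. The csv module quotes any field that contains a comma, which matters because titles and synopses can contain commas.

```python
import csv
import io

# Hypothetical column order following the crawl targets: link, name, score, synopsis
FIELDS = ["url", "name", "score", "drama"]


def write_movies(rows, fp):
    """Write movie dicts to an open text file as CSV rows, without a header row."""
    writer = csv.DictWriter(fp, fieldnames=FIELDS)
    for row in rows:
        # Fields containing a comma, quote or newline are quoted automatically
        writer.writerow(row)


if __name__ == "__main__":
    buf = io.StringIO()
    write_movies([{"url": "https://ssr3.scrape.center/detail/1",
                   "name": "Example, Movie", "score": "9.5", "drama": "..."}], buf)
    print(buf.getvalue())
```

To write to a real file, open it with newline="" (as the csv documentation requires) and an explicit encoding, for example open("data.csv", "w", newline="", encoding="utf-8"). Call writer.writeheader() first if you want a header row.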

Authentication

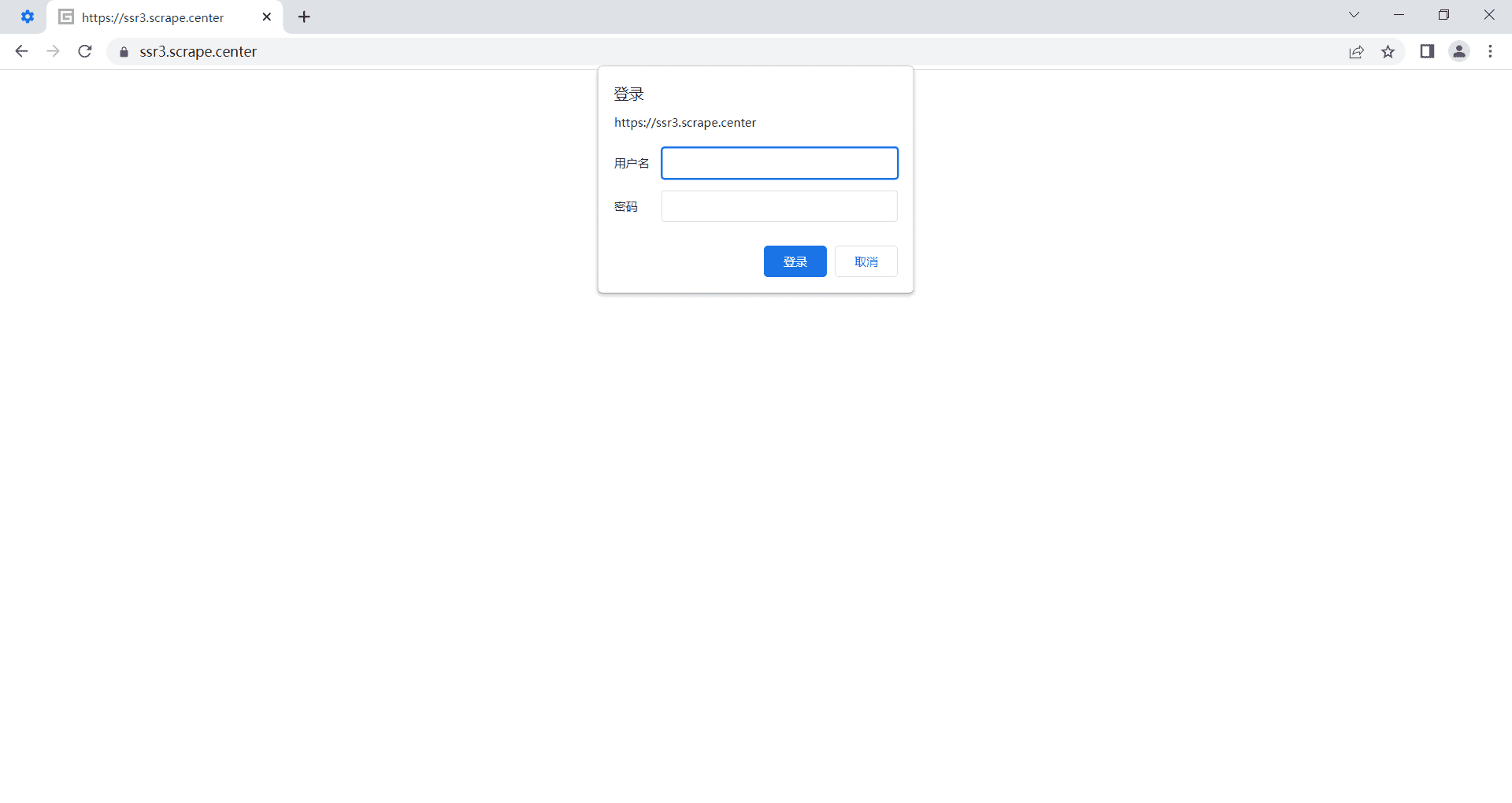

访问 https://ssr3.scrape.center 会弹出认证窗口:

Access without authentication

If the crawler requests the page directly, without credentials:

```python
import requests
```

the server responds with:

```html
<html>
```

That is, the server answers with HTTP 401: authorization is required.
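To confirm in code that the 401 is a Basic auth challenge, you can inspect the WWW-Authenticate response header. The sketch below uses the standard library and makes no assumption about the realm string ssr3 actually sends. probe and needs_basic_auth are hypothetical helpers:

```python
from urllib.error import HTTPError
from urllib.request import urlopen


def probe(url: str):
    """Request url without credentials; return (status, WWW-Authenticate header or None)."""
    try:
        with urlopen(url, timeout=10) as resp:
            return resp.status, None
    except HTTPError as e:
        # A 401 response carries the server's challenge, e.g. 'Basic realm="..."'
        return e.code, e.headers.get("WWW-Authenticate")


def needs_basic_auth(status: int, challenge) -> bool:
    """True when the server answered 401 with a Basic challenge."""
    return status == 401 and challenge is not None and challenge.lower().startswith("basic")
```

Calling probe("https://ssr3.scrape.center/") should return 401 together with a challenge that starts with Basic. That challenge is what makes the browser show the login dialog.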

Access with authentication

Authentication with urllib

Authentication code provided by Cui Qingcai:

```python
from urllib.request import HTTPBasicAuthHandler, HTTPPasswordMgrWithDefaultRealm, build_opener
```
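The import line above follows the standard urllib pattern. A password manager stores the credentials, HTTPBasicAuthHandler uses them, and build_opener wires the handler into an opener. The following sketches that pattern. It is not necessarily Cui Qingcai's exact code, and build_auth_opener and fetch are hypothetical names:

```python
from urllib.request import (HTTPBasicAuthHandler, HTTPPasswordMgrWithDefaultRealm,
                            build_opener)

URL = "https://ssr3.scrape.center/"


def build_auth_opener(url, username, password):
    """Return an opener that can answer HTTP Basic auth challenges for url."""
    # realm=None: the credentials apply to whatever realm the server announces
    password_mgr = HTTPPasswordMgrWithDefaultRealm()
    password_mgr.add_password(None, url, username, password)
    # After a 401 with a Basic challenge, the handler retries with an Authorization header
    return build_opener(HTTPBasicAuthHandler(password_mgr))


def fetch(url=URL):
    """Download url with the ssr3 credentials; HTTPError/URLError propagate on failure."""
    opener = build_auth_opener(url, "admin", "admin")
    with opener.open(url, timeout=10) as resp:
        return resp.read().decode("utf-8")
```

With HTTPPasswordMgrWithDefaultRealm, urllib only sends credentials after the server replies 401, so each URL costs two round trips. HTTPPasswordMgrWithPriorAuth (Python 3.5+) avoids this when used with add_password(..., is_authenticated=True), which sends the credentials with the first request.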

Authentication with requests

Simply pass a 2-tuple (username, password) to the auth parameter:

```python
import requests
```
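You can check what the auth tuple does without touching the network. While preparing the request, requests turns a (username, password) tuple into requests.auth.HTTPBasicAuth and sets the Authorization header. The sketch below shows this. fetch and preview_headers are hypothetical helpers:

```python
import requests
from requests.auth import HTTPBasicAuth

URL = "https://ssr3.scrape.center/"

# A (username, password) tuple is shorthand for HTTPBasicAuth(username, password)
AUTH = ("admin", "admin")


def fetch(url: str = URL) -> str:
    resp = requests.get(url, auth=AUTH, timeout=10)
    resp.raise_for_status()  # a 401 here would mean the credentials were rejected
    return resp.text


def preview_headers(auth) -> dict:
    """Prepare (but do not send) a request to see the headers requests would add."""
    prepared = requests.Request("GET", URL, auth=auth).prepare()
    return dict(prepared.headers)
```

Unlike urllib's handler, requests sends the header with the very first request and does not wait for a 401.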

Complete code

Crawl all movies and save them to CSV

urllib

```python
import re
```
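Here is a sketch of how such a crawl can be assembled from urllib, re and csv. It rests on clearly labeled assumptions. First, the list pages are assumed to be /page/1 to /page/10. Second, the regular expressions are guesses at the page markup, modelled on the other ssr cases, so check them against the real HTML before relying on them. All function names are hypothetical.

```python
import csv
import re
from urllib.parse import urljoin
from urllib.request import (HTTPBasicAuthHandler, HTTPPasswordMgrWithDefaultRealm,
                            build_opener)

BASE_URL = "https://ssr3.scrape.center"
TOTAL_PAGES = 10  # assumption: list pages run from /page/1 to /page/10
USERNAME = PASSWORD = "admin"

# Assumed markup patterns; verify them against the real ssr3 HTML before relying on them
DETAIL_LINK = re.compile(r'<a[^>]*?href="(/detail/\d+)"[^>]*?class="name"', re.S)
NAME = re.compile(r"<h2[^>]*>(.*?)</h2>", re.S)
SCORE = re.compile(r'<p[^>]*class="score[^"]*"[^>]*>(.*?)</p>', re.S)
DRAMA = re.compile(r'<div[^>]*class="drama"[^>]*>.*?<p[^>]*>(.*?)</p>', re.S)


def make_opener():
    """Opener that answers the Basic auth challenge for every URL under BASE_URL."""
    password_mgr = HTTPPasswordMgrWithDefaultRealm()
    password_mgr.add_password(None, BASE_URL, USERNAME, PASSWORD)
    return build_opener(HTTPBasicAuthHandler(password_mgr))


def fetch(opener, url):
    with opener.open(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def parse_index(html):
    """Absolute detail-page URLs on one list page (only the title link, class="name")."""
    return [urljoin(BASE_URL, path) for path in DETAIL_LINK.findall(html)]


def first_match(pattern, html):
    """First captured group with surrounding whitespace removed, or '' if absent."""
    match = pattern.search(html)
    return match.group(1).strip() if match else ""


def parse_detail(url, html):
    """One CSV row: detail URL, movie name, score, synopsis."""
    return [url, first_match(NAME, html), first_match(SCORE, html), first_match(DRAMA, html)]


def crawl(path="data.csv"):
    opener = make_opener()
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        for page in range(1, TOTAL_PAGES + 1):
            for detail_url in parse_index(fetch(opener, f"{BASE_URL}/page/{page}")):
                writer.writerow(parse_detail(detail_url, fetch(opener, detail_url)))
```

Calling crawl() writes one row per movie (detail URL, name, score, synopsis) to data.csv. Keeping the parsing functions separate from fetching means you can test the regular expressions on saved HTML without sending requests.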

requests

```python
import re
```
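With requests, only the fetching layer differs from the urllib version; parsing and CSV writing can stay the same. A Session with auth set sends the credentials on every request and reuses connections across the many detail-page requests. The sketch below makes the same assumption that list pages run from /page/1 to /page/10. make_session, index_urls and fetch are hypothetical names.

```python
import requests

BASE_URL = "https://ssr3.scrape.center"
TOTAL_PAGES = 10  # assumption: list pages run from /page/1 to /page/10


def make_session(username: str = "admin", password: str = "admin") -> requests.Session:
    """Session whose every request carries Basic auth credentials."""
    session = requests.Session()
    session.auth = (username, password)  # applied to all requests made via this session
    return session


def index_urls(total_pages: int = TOTAL_PAGES):
    """URLs of the list pages to crawl."""
    return [f"{BASE_URL}/page/{page}" for page in range(1, total_pages + 1)]


def fetch(session: requests.Session, url: str) -> str:
    resp = session.get(url, timeout=10)
    resp.raise_for_status()  # surface 401/5xx instead of parsing an error page
    return resp.text
```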

Output

Part of data.csv:

```csv
https://ssr1.scrape.center/detail/1,霸王别姬 - Farewell My Concubine,9.5,影片借一出《霸王别姬》的京戏,牵扯出三个人之间一段随时代风云变幻的爱恨情仇。段小楼(张丰毅 饰)与程蝶衣(张国荣 饰)是一对打小一起长大的师兄弟,两人一个演生,一个饰旦,一向配合天衣无缝,尤其一出《霸王别姬》,更是誉满京城,为此,两人约定合演一辈子《霸王别姬》。但两人对戏剧与人生关系的理解有本质不同,段小楼深知戏非人生,程蝶衣则是人戏不分。段小楼在认为该成家立业之时迎娶了名妓菊仙(巩俐 饰),致使程蝶衣认定菊仙是可耻的第三者,使段小楼做了叛徒,自此,三人围绕一出《霸王别姬》生出的爱恨情仇战开始随着时代风云的变迁不断升级,终酿成悲剧。
```