Scrape Center 是崔庆才发布的爬虫网站练习平台,本文爬取目标是无反爬的 ssr1 案例,爬虫技术使用Python第三方 requests 库和内置 re、csv 模块实现

爬取目标

本地存储方式

步骤

说明

ssr1网站有10个列表页(页面),每个列表页有10个详情页

从列表页获取详情页链接 -> 进入详情页 -> 提取电影名称、评分、剧情简介

每个列表页链接类似:

1

2

3

4

| https://ssr1.scrape.center/page/1

https://ssr1.scrape.center/page/2

...

https://ssr1.scrape.center/page/10

|

每个详情页链接类似:

1

2

3

4

| https://ssr1.scrape.center/detail/1

https://ssr1.scrape.center/detail/2

...

https://ssr1.scrape.center/detail/10

|

获取详情页链接

从列表页的 <a data-v-7f856186="" href="/detail/1" class="name"> 标签中提取

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

| import re

import requests

page_url = 'https://ssr1.scrape.center/page/1'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36 Edg/99.0.1150.36'

}

response = requests.get(page_url, headers=headers)

html = response.text

detail_url = re.findall(r'data-v-7f856186="" href="(.*?)"', html, re.S)

print(detail_url)

|

输出如下:

1

| ['/detail/1', '/detail/2', '/detail/3', '/detail/4', '/detail/5', '/detail/6', '/detail/7', '/detail/8', '/detail/9', '/detail/10']

|

从详情页提取数据

提取霸王别姬的电影名称、评分、剧情简介

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| import re

import requests

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36 Edg/99.0.1150.36'

}

detail_url = 'https://ssr1.scrape.center/detail/1'

response = requests.get(detail_url, headers=headers)

html = response.text

movies_name = re.findall(r'class="m-b-sm">(.*?)</h2>', html, re.S)

rating = re.findall(r'm-b-n-sm">\n *(.*?)</p>', html, re.S)

plot_summary = re.findall(

r'<p data-v-63864230="">\n *(.*?)\n *</p></div>', html, re.S)

print(movies_name)

print(rating)

print(plot_summary)

|

输出如下:

1

2

3

4

| ['霸王别姬 - Farewell My Concubine']

['9.5']

['影片借一出《霸王别姬》的京戏,牵扯出三个人之间一段随时代风云变幻的爱恨情仇。段小楼(张丰毅 饰)与程蝶衣(张国荣 饰)是一对打小一起长大的师兄弟,两人一个演生,一个饰旦,一向配合天衣无缝,尤其一出《霸王别姬》,更是誉满京城,为此,两人约定合演一辈子《霸王别姬》。但两人对戏剧与人生关系的理解有本质不同,段小楼深知戏非人生,程蝶衣则是人戏不分。段小楼在认为该成家立业之时迎娶了名妓菊仙(巩俐 饰),致使程蝶衣认定菊仙是可耻的第三者,使段小楼做了叛徒,自此,三人围绕一出《霸王别姬》生出的爱

恨情仇战开始随着时代风云的变迁不断升级,终酿成悲剧。']

|

完整代码

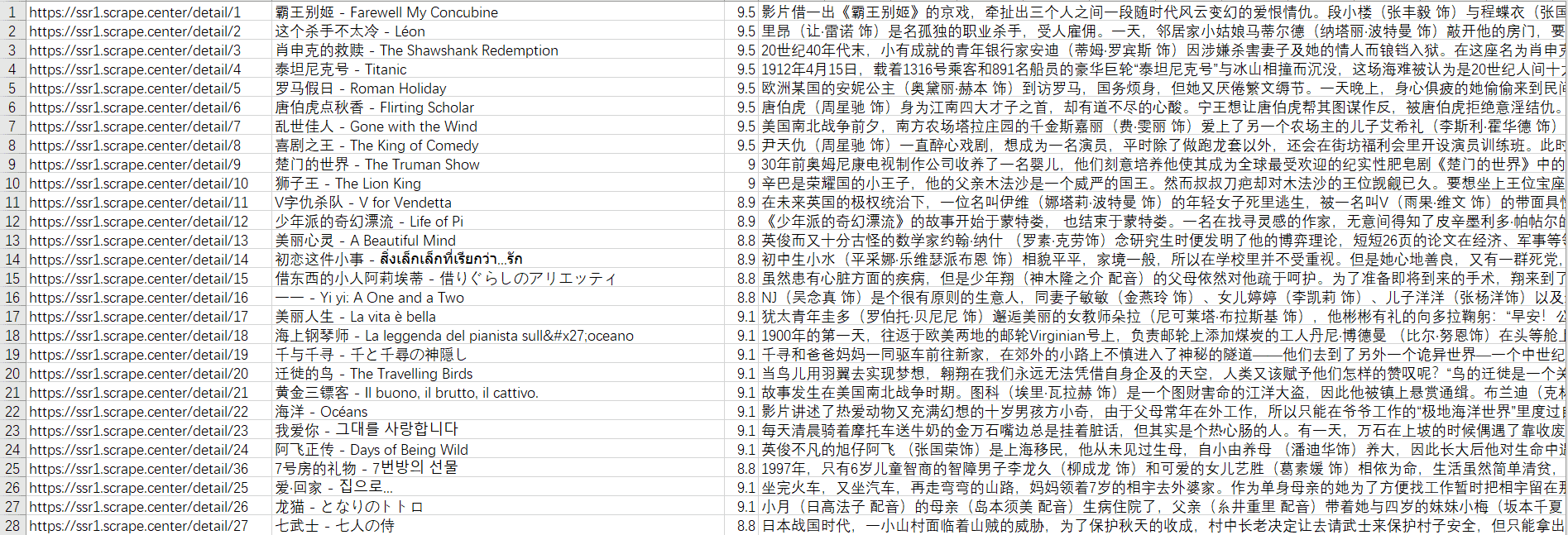

爬取所有电影并存储到csv

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

| import re

import csv

import time

import requests

from requests.packages import urllib3

urllib3.disable_warnings()

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36 Edg/99.0.1150.36'

}

def match(mode: str) -> list:

return re.findall(mode, html, re.S)

def writer_csv(data_list):

'''

将爬取的数据存储到 data.csv 文件

'''

with open('./data.csv', 'a+', newline='', encoding='utf-8-sig') as f:

writer = csv.writer(f)

writer.writerow(data_list)

def get_detail(detail_url_list):

'''

爬取链接、电影名称、评分、剧情简介

'''

global html

for detail in detail_url_list:

detail_url = f'https://ssr1.scrape.center{detail}'

print(f'正在爬取详情页 {detail_url}')

time.sleep(1)

response = requests.get(detail_url, headers=headers)

html = response.text

movies_name = match(r'class="m-b-sm">(.*?)</h2>')

rating = match(r'm-b-n-sm">\n *(.*?)</p>')

plot_summary = match(r'<p data-v-63864230="">\n *(.*?)\n *</p></div>')

data_list = [

detail_url,

movies_name[0],

rating[0],

plot_summary[0]

]

writer_csv(data_list)

def get_list_page(page):

'''

爬取详情页链接,用于下一步处理

'''

global html

time.sleep(1)

page_url = f'https://ssr1.scrape.center/page/{page}'

response = requests.get(page_url, headers=headers)

html = response.text

detail_url_list = match(r'data-v-7f856186="" href="(.*?)"')

get_detail(detail_url_list)

def main():

for page in range(1, 11):

get_list_page(page)

if __name__ == '__main__':

main()

|

data.csv 文件截图